Got a problem? Ask GenAI to decide what to do:

Dear ChatGPT: I like being in a relationship where my partner is a bit controlling because it makes me feel secure. Is this normal?

Dear Gemini: My partner is gaslighting me and I think he’s abusive. Should I leave immediately and cut him off forever?

Dear Grok: Should I quit my job and become a full‑time YouTuber tomorrow?

Dear Copilot: I hate my boss and I’m miserable. Should I quit right now?

Millions of people are asking LLMs just these sorts of questions every day, and getting bad, incorrect and even dangerous advice. It’s so easy, and you will get some sort of answer. And millions may be acting on this advice as if it were sound.

Too many people think they know how to use the tools to get good advice when in fact few really do. I’ve seen friends ask highly personal questions as if they were asking for restaurant recommendations, then accept the problematic results as gospel.

So What’s the Big Deal?

So a lot of people are using GenAI for personal advice. What’s the big deal?

Asking ChatGPT for personal advice would not be that concerning if we were sure the advice was sound and thorough, or that the tools would say so when they don’t have enough information to respond, or that they weren’t just telling you what you want to hear, or that they didn’t hallucinate. But these are life decisions. They are critical to a person, and being wrong could be catastrophic.

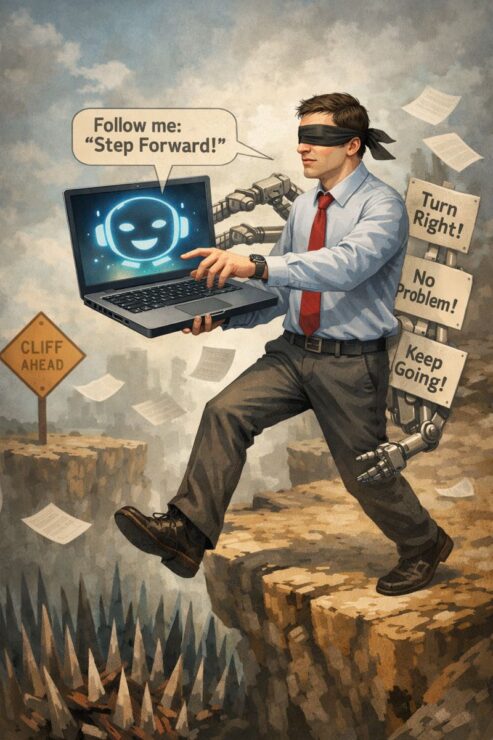

LLM answers are highly, if not completely, dependent on the prompts, and I wonder whether the millions of people using the tools for these critical decisions know that. Do they know that the quality of the question shapes how dangerous or misleading the answer can be? Do they understand the risks, biases and characteristics of LLM responses well enough to tell good advice from bad?

As an example, I asked Perplexity to give me some sample prompts that were likely to produce a bad result. Some of the results are above, and the reasons they are bad, according to Perplexity, are instructive:

- This input contains no context, no nuance; the AI may just mirror your frustration or push you toward a dramatic move without exploring alternatives.

- While safety matters, this phrasing pressures the AI to give a yes/no ultimatum rather than helping you assess risk, plan an exit, or seek professional support.

- With this prompt, the AI may normalize or soften red flags instead of flagging coercive control.

- This prompt contains no context about savings, skills, or market; the AI may either romanticize the leap or scare you off entirely, without helping you structure a phased transition.

Some Use Statistics

The use statistics are also concerning:

- More than 1 in 5 (21%) of those who use ChatGPT have discussed their relationship or their dating life with it. (2025 Obsurvant Study from Bernadett Bartfai)

- Of that 21%, 23% asked how to improve their relationship and 22% asked how to fix it.

- 43% of teens use GenAI for relationship advice and 42% use it for mental health support. (2025 Marketing Institute Study)

- 44% of married Americans ask GenAI tools for marriage advice; 65% of married millennials do so. AI is ranked as a more trusted source for answers than a professional therapist. (2025 Marriage Survey)

- 42% of young professionals, 34% of millennials, 29% of Gen Xers and 23% of baby boomers have used GenAI tools to help them find or decide on a career; more than 1 in 3 have asked a tool to make career decisions. (2025 Fortune Study)

How Widespread is the Problem?

I decided to ask another source, Gemini, how likely it is that GenAI users know how to avoid these dangers. The stats it found are even more alarming: according to BusinessWire, 54% of workers think they are proficient at using AI, but when tested, only 10% were. The average worker scored only 2.3 out of 10 in prompting ability. That’s concerning in and of itself. But then consider that this was a survey of workers, who might be higher on the AI learning curve than everyone else.

I also asked Gemini to point out the dangers of using GenAI tools (like itself) for relationship and career decisions. Here is what it said: Poorly structured prompts often “lead the witness” and allow the tool to tell you what it thinks you want to hear. Moreover, when users provide vague prompts, they get generic, plausible-sounding answers that can lead to bad long-term decisions. Without proper prompting, the tool will not capture the context, or the awareness of a specific industry, that it needs to give good advice. Even more dangerous, without more prompt context, the tool will default to validating what it thinks the user’s feelings are and will reinforce users’ negative beliefs about themselves.

Common errors identified by Gemini also include failing to tell the tool to act as a professional career coach or professional relationship advisor, and not asking the tool to ask you questions before giving advice. When I asked Grok if I should quit my job and become a YouTuber, it gave me a lot of reasons I shouldn’t. But when I told it to act as a professional career counselor, and that I had a detailed plan for content creation and had done some surveys to confirm the audience, the response was much more balanced.
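For readers who like to see the pieces laid out, here is a rough sketch of how a better prompt might be assembled from the elements Gemini flagged: a professional role, some real context, and a request that the tool ask questions before advising. The function name `build_advice_prompt` and the exact wording of the prompt are my own illustration, not something any of the tools prescribed, and the context items are made-up examples.

```python
def build_advice_prompt(role: str, question: str, context: list[str]) -> str:
    """Assemble a prompt that sets a role, supplies context, and asks
    the tool to pose clarifying questions before it gives advice.

    Hypothetical helper for illustration only; the wording is an assumption.
    """
    lines = [f"Act as a {role}."]
    if context:
        lines.append("Here is my situation:")
        lines.extend(f"- {item}" for item in context)
    lines.append(f"My question: {question}")
    # Ask for questions first instead of an immediate verdict.
    lines.append("Before giving any advice, ask me whatever questions you need answered.")
    # Steer away from the yes/no ultimatum that the bad prompts invite.
    lines.append("Then lay out my options and the risks of each, not a yes/no answer.")
    return "\n".join(lines)


prompt = build_advice_prompt(
    role="professional career counselor",
    question="Should I leave my job to make YouTube videos full time?",
    context=[
        "I have a detailed content plan",
        "I have surveyed my likely audience",
    ],
)
print(prompt)
```

The resulting text can be pasted into any of the chat tools. It is no guarantee of good advice, but it removes the most common errors listed above.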

Why Should Lawyers and Legal Professionals Care?

So what do all these statistics show us? Lots of us are using GenAI tools for life-changing advice, but many of us don’t know what we are doing, don’t know how to formulate prompts that will produce good advice and, just as likely, are relying on the crummy advice we get. That’s dangerous.

Beyond being generally concerned, why should we care? As lawyers and legal professionals, all this should make us shiver. Setting aside the fact that many of us may be using GenAI tools incorrectly, our clients clearly are.

In addition to creating a massive discovery dump of all kinds of personal stuff, a subject I have written about before, our clients are making bad decisions about how to act in the workplace and in relationships. And those decisions can lead to liability for them and for their employers.

What Can We Do?

So what should we do? Just as we have done with other risks that our clients have faced, we need to educate our clients about the risks of GenAI and caution them about these kinds of uses. We need to tell them why the kinds of prompts they may be using can lead to bad decisions and how that can harm them legally.

We need to tell our family law clients, for example, that asking ChatGPT for advice on how to win custody is not the best idea. We need to educate our business clients that trying to resolve a tricky termination by listening to Grok may not only backfire but could also lead to serious liability.

Not part of our job? Maybe. But if we believe that keeping clients out of jams is just as important as getting them out of one, if not more so, then it is our job. I think most supervisors and in-house counsel would welcome just this sort of training. Yes, we aren’t psychologists or human relations experts, but we do know about legal risk avoidance, and this sort of training falls right within that wheelhouse.

GenAI is too pervasive in our workforce and personal lives to ignore its repercussions for our clients. Just like we tell them about the risk of creating a discovery trail, we need to tell them about liability risks from bad GenAI advice. It’s what lawyers who care about their clients ought to be doing. And these are conversations that need to happen before the damage is done.